Microsoft Recalls Controversial AI Feature: A Deep Dive into Privacy Concerns

Recall, Microsoft’s new AI feature for Windows 11, has faced significant backlash over privacy. It periodically captures screenshots of users’ activity so that it can provide context-aware assistance, and that design has alarmed security experts and users alike.

What is Microsoft Recall?

The Concept

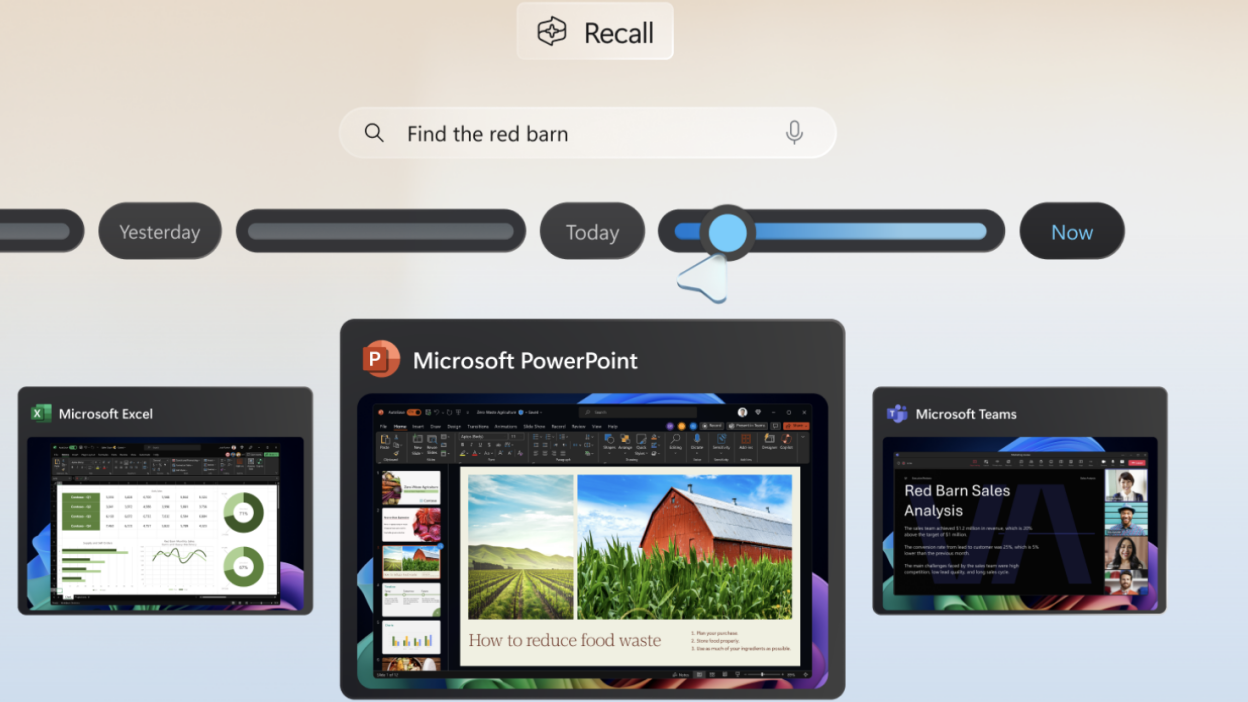

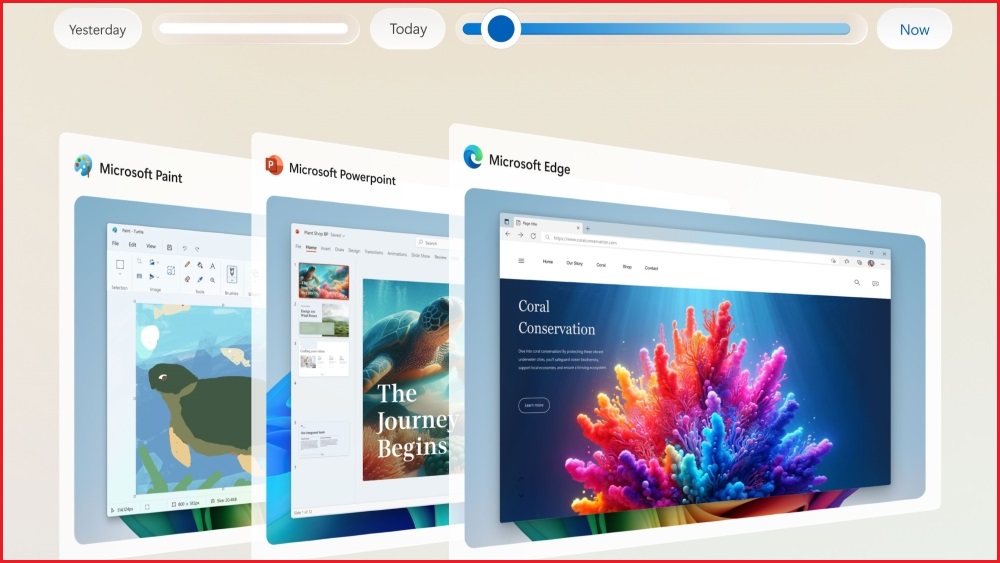

Recall is an AI-based feature embedded in Windows 11, aimed at enhancing productivity by recording users’ past activities and making them searchable. The feature captures screenshots of everything displayed on the user’s desktop at regular intervals, so that users can later search for and retrieve what they saw. For example, a user can ask the AI, “What was the movie I looked up yesterday?” and Recall answers by analyzing the stored screenshots.
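The capture-and-search idea can be illustrated with a small Python sketch. This is not Microsoft’s implementation: the `Snapshot` and `SnapshotIndex` names are hypothetical, the on-screen text is assumed to have already been extracted from each screenshot, and simple keyword matching stands in for whatever AI-based search Recall actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    taken_at: datetime
    app: str
    text: str  # assumed to be text already extracted from the screenshot


class SnapshotIndex:
    """Toy in-memory index; a real system would persist and rank results."""

    def __init__(self) -> None:
        self._snapshots: list[Snapshot] = []

    def add(self, snapshot: Snapshot) -> None:
        self._snapshots.append(snapshot)

    def search(self, query: str, since: Optional[datetime] = None) -> list[Snapshot]:
        """Return snapshots whose text contains every query word, newest first."""
        words = query.lower().split()
        hits = [
            s for s in self._snapshots
            if (since is None or s.taken_at >= since)
            and all(w in s.text.lower() for w in words)
        ]
        return sorted(hits, key=lambda s: s.taken_at, reverse=True)


def demo_index() -> SnapshotIndex:
    """Build a tiny index with two made-up snapshots."""
    index = SnapshotIndex()
    index.add(Snapshot(datetime(2024, 6, 1, 20, 15), "browser",
                       "Dune: Part Two showtimes and reviews"))
    index.add(Snapshot(datetime(2024, 6, 2, 9, 0), "mail",
                       "Quarterly budget review"))
    return index
```

Calling `demo_index().search("dune showtimes")` returns only the browser snapshot. The same sketch also shows why the design is sensitive: whatever appears on screen, including passwords or private messages, ends up in the searchable index.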

Privacy Concerns

The primary concern is the extent of surveillance this feature implies. Since Recall takes screenshots of all activities, including potentially sensitive information like passwords, bank statements, and personal messages, it poses a significant privacy risk. Critics argue that this level of monitoring is invasive and that the data collected could be exploited if not properly secured.

Security Risks and Public Backlash

Data Storage and Encryption Issues

Initial reports revealed that the screenshots captured by Recall, along with the database built from them, were stored in a local folder on the computer without encryption of their own. This meant that anyone with administrative access to the computer, including malware running with that level of access, could potentially retrieve them. The lack of encryption and secure storage mechanisms left the data vulnerable to exploitation by malicious actors.
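A short Python sketch shows why unencrypted local storage matters. It does not touch Recall’s actual files or schema; the `snapshots` table, the file name, and the sample text are all hypothetical. It simply writes text to an ordinary SQLite file and then scans the file’s raw bytes, as any process with read access could.

```python
import os
import sqlite3
import tempfile


def write_snapshot_text(db_path: str, text: str) -> None:
    """Store text in an ordinary, unencrypted SQLite file."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS snapshots (id INTEGER PRIMARY KEY, text TEXT)"
        )
        conn.execute("INSERT INTO snapshots (text) VALUES (?)", (text,))
        conn.commit()
    finally:
        conn.close()


def raw_file_contains(db_path: str, needle: str) -> bool:
    """Scan the file's raw bytes without using SQLite or any credentials."""
    with open(db_path, "rb") as fh:
        return needle.encode("utf-8") in fh.read()


def demo() -> bool:
    """Write a fake secret to a temporary database and look for it on disk."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "snapshots.db")
        write_snapshot_text(db_path, "account password: hunter2")
        return raw_file_contains(db_path, "hunter2")
```

Here `demo()` returns `True`: the text sits in the file in readable form, so file-system permissions are the only barrier between the data and whoever can read the file.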

Reaction from the Public and Experts

The public and cybersecurity experts reacted strongly against the default activation of Recall, citing severe privacy and security risks. Many argued that users should explicitly opt in to such features rather than being automatically enrolled. The potential for misuse, especially in corporate environments where employers could have unfettered access to employees’ activities, further fueled the backlash.

Microsoft’s Response and Changes

Opt-In Mechanism

In response to the widespread criticism, Microsoft announced that it would modify the implementation of Recall. The feature will now be disabled by default, and users must explicitly opt in to enable it. This change aims to give users more control over their data and alleviate some privacy concerns.

Enhanced Security Measures

Microsoft also pledged to improve the security of the stored data. Future updates will encrypt both the screenshots and the associated database. Additionally, access to the stored data will require the user to authenticate through Windows Hello, adding another layer of protection against unauthorized access.
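The authentication requirement can be sketched in Python as a store that releases its records only after a successful check. This is an illustration under loose assumptions, not how Windows implements it: the `GatedStore` class is hypothetical, a PIN stands in for the real sign-in mechanism, and encryption of the records is left out because Python’s standard library does not include a suitable cipher.

```python
import hashlib
import hmac
import os


class GatedStore:
    """Releases stored records only after a successful PIN check."""

    def __init__(self, pin: str) -> None:
        self._salt = os.urandom(16)
        self._verifier = self._derive(pin)  # the PIN itself is never stored
        self._records: list[str] = []

    def _derive(self, pin: str) -> bytes:
        # PBKDF2 makes each guess expensive, slowing brute-force attempts.
        return hashlib.pbkdf2_hmac(
            "sha256", pin.encode("utf-8"), self._salt, 200_000
        )

    def add(self, record: str) -> None:
        self._records.append(record)

    def read_all(self, pin: str) -> list[str]:
        # Constant-time comparison avoids leaking how much of the value matched.
        if not hmac.compare_digest(self._derive(pin), self._verifier):
            raise PermissionError("authentication failed")
        return list(self._records)
```

With `store = GatedStore("4821")`, a call to `store.read_all("4821")` returns the records, while any other PIN raises `PermissionError`. A gate like this only helps if the data is also encrypted at rest; otherwise the files can be read directly, bypassing the check.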

The Broader Context of AI and Privacy

Comparisons with Other AI Assistants

AI assistants like Siri, Google Assistant, and Alexa also collect and process user data to provide personalized experiences. However, these assistants typically do not take continuous screenshots of user activities. Instead, they rely on voice commands and contextual data to function. Recall’s continuous collection of visual data distinguishes it from these assistants and heightens privacy concerns.

The Role of Privacy in AI Development

The Recall controversy highlights the critical balance between functionality and privacy in AI development. While AI can significantly enhance user experience by providing context-aware assistance, it must be implemented with stringent privacy safeguards. Users need assurance that their data is protected and used ethically.

Conclusion: A Step Towards Responsible AI

Microsoft’s decision to revise the implementation of Recall is a positive step towards addressing privacy concerns. By making the feature opt-in and enhancing data security measures, Microsoft aims to regain user trust and demonstrate a commitment to responsible AI development.

As AI continues to evolve, companies must prioritize user privacy and transparency. Features like Recall have the potential to transform how we interact with our devices, but they must be designed with careful consideration of the implications for user privacy and data security. This case serves as a reminder that innovation in AI should always be balanced with ethical considerations and robust safeguards to protect user data.